Embrace "Let it break"!

Author: Pramod Kumar (@pramodk73)

It is breaking!

The word "break" comes up very often in software engineering. A change breaks the system when, intentionally or unintentionally, it makes the affected process stop serving its purpose or produce an unintended outcome. Clearly, breaking changes are not encouraged, and they have to be fixed as soon as possible so that the impact is minimal.

A breakage can affect a system in several ways. Some breakages bring the entire system down, whereas others corrupt the data. Corrupted data is the more dangerous outcome, as it is very hard to recover or repair.

The other dimension of these breaking changes is the level of impact they have. Some bring down a particular service or part of the application, while others bring down the entire system. Once you understand what these breakages can do to the system, it is a common tendency to try to write code that never breaks. In fact, it has to be that way so that the system is consistent and reliable.

The problem comes when people become obsessed with code that never breaks. Here are a few common patterns I have come across where people try to build a system that does not break and mess up the system in return.

Try catch everything

The easiest way to make sure your changes never break is to wrap them in a try block and catch all exceptions. As a concrete example, say you are building a REST API and you never want it to break (which, for most web servers, means returning a 5XX).

    # bad
    try:
        do_something(...)
    except Exception:
        return 400

    # better
    try:
        do_something(...)
    except NotFoundError:  # I am explicitly expecting this type of error
        return 400

The misunderstanding is that by deliberately sending back a 400 instead of letting the server throw a 5XX, no alarms go off and everything stays green. It is a misconception. There is a reason 5XX status codes exist. Most logging and monitoring setups record unhandled exceptions automatically so that the appropriate alarms go off.

The way to handle it meaningfully is to take a step back and decide which exceptions we actually expect the code to raise. Let us take an example. There is a REST API that returns the details of a resource (User/Order). If we expect requests for a resource that does not exist, we should catch (handle) only NotFound exceptions (assuming some internal system raises them) instead of all sorts of exceptions. This way, the system will break if there is a legitimate issue, for example a problem with the database connection. Consequently, the alarms will go off and the firefighting team will fix it. Otherwise, no one would ever notice that there is a database connection issue in this piece of code.
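A minimal, runnable sketch of that idea follows. Everything in it is hypothetical and only for illustration: the in-memory `USERS` store, the `fetch_user` helper, the `db_up` flag that simulates a database outage, and the `(status, body)` return shape. It also uses 404 for the missing resource, which is the conventional status for that case.

```python
class NotFoundError(Exception):
    """Hypothetical error raised when a resource does not exist."""


# Hypothetical in-memory store standing in for a real database.
USERS = {1: {"id": 1, "name": "Asha"}}


def fetch_user(user_id, db_up=True):
    """Hypothetical data-access helper."""
    if not db_up:
        # Stands in for a genuine infrastructure failure.
        raise ConnectionError("database is unreachable")
    if user_id not in USERS:
        raise NotFoundError(f"user {user_id} does not exist")
    return USERS[user_id]


def get_user_handler(user_id, db_up=True):
    """Return (status_code, body); only the expected error is handled."""
    try:
        user = fetch_user(user_id, db_up=db_up)
    except NotFoundError:
        # Expected case: the client asked for a missing resource.
        return 404, {"error": "user not found"}
    # Anything else (e.g. ConnectionError) is NOT caught here, so it
    # propagates and the surrounding framework can answer with a 5XX.
    return 200, user
```

Calling `get_user_handler(2)` returns a 404 response, while `get_user_handler(1, db_up=False)` lets the `ConnectionError` escape, which is exactly the failure we want the alarms to see.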

Not solving the root cause

It is quite common to fix a bug as soon as it is reported, right at the place where it shows up. By that I mean fixing the error exactly where the stack trace points instead of taking a step back and understanding the root cause of the issue.

Let me explain with a simple example. Imagine you have a function that calculates a student's scorecard from individual subject marks. This function is called from a user interface or a web application. Suddenly the error count shoots up, with errors saying that addition is not allowed between a string and a number.

The quickest way to solve this is to type-cast the marks of each subject to a float or an integer. It certainly brings down the error count, but it does not solve the root problem. Why is the user interface passing a string instead of a number in the first place? Most probably the UI is passing strings instead of numbers in other places too. Just let things break and fix the root cause!
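As a rough sketch of what "letting it break" could look like here, the function below refuses non-numeric marks with a descriptive error instead of quietly casting them. The name `calculate_total` and the dict-of-marks input shape are assumptions, since the original scorecard function is not shown.

```python
def calculate_total(marks):
    """Sum subject marks; `marks` maps a subject name to a number.

    Hypothetical function, written only to illustrate the idea.
    """
    for subject, mark in marks.items():
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(mark, bool) or not isinstance(mark, (int, float)):
            # No float(mark) rescue here: the caller sent bad data,
            # and the error message says exactly which field was wrong.
            raise TypeError(
                f"marks for {subject!r} must be a number, "
                f"got {type(mark).__name__}: {mark!r}"
            )
    return sum(marks.values())
```

With this in place, a call such as `calculate_total({"math": "90"})` fails loudly and names the offending field, which points the investigation at the UI that produced the string rather than at the addition that tripped over it.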

Hiding the issue

Imagine we are building a loan management system that needs to integrate with callbacks from multiple payment gateways so that repayments are added to the system automatically. When we receive a payment callback, we need to add it to a Mongo collection.

    # bad
    def add_repayment(loan_id, repayment):
        loan = get_loan(loan_id)
        if loan is None:
            # It does not make sense to receive a repayment if the loan was never created (contextual)
            loan = Loan()
        loan.add(repayment)

    # better
    def add_repayment(loan_id, repayment):
        loan = get_loan(loan_id)
        if loan is None:
            raise LoanDoesNotExist()
        ...

The first version is fine until it isn't. Because it creates a new document when the loan does not exist, it hides the problem of the loan document being missing in the first place. Why would there be a repayment if there is no loan in the system? It means there is a bug in loan creation. Instead of hiding issues, let them break and fix them fast!
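To make the "better" version concrete, here is a small self-contained sketch. The in-memory `LOANS` dict is a hypothetical stand-in for the Mongo collection, and the `Loan` class and `get_loan` helper are simplified assumptions rather than the real implementation.

```python
class LoanDoesNotExist(Exception):
    """Raised when a repayment arrives for an unknown loan."""


class Loan:
    """Simplified, hypothetical loan that only tracks repayments."""

    def __init__(self):
        self.repayments = []

    def add(self, repayment):
        self.repayments.append(repayment)


# Hypothetical in-memory stand-in for the Mongo collection.
LOANS = {"loan-1": Loan()}


def get_loan(loan_id):
    """Return the loan, or None when it does not exist."""
    return LOANS.get(loan_id)


def add_repayment(loan_id, repayment):
    loan = get_loan(loan_id)
    if loan is None:
        # Do not create a placeholder loan: a repayment without a loan
        # means something upstream is broken, so surface it.
        raise LoanDoesNotExist(f"no loan with id {loan_id!r}")
    loan.add(repayment)
    return loan
```

A callback for `"loan-1"` records the repayment as usual, while a callback for an unknown id raises `LoanDoesNotExist` and leaves the store untouched, so the missing loan shows up as an error instead of as a phantom document.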

Not releasing frequently

People often ship changes as one major release, for a variety of reasons. This increases the chances of breaking, as you would be releasing many untested changes to production at once. Instead, set up your deployment process so that the release cycle is short and you release more frequently.

It makes sure that the system does not collapse entirely if there is a breaking change. Because the changes are small, the breakages or bugs tend to be small too. Releasing frequently also means that any new bug most likely comes from the most recent changes, which narrows down where to look.

More

There is more to the list, which may sound silly but is worth mentioning.

- Silent failures need to be avoided. I have seen developers who just log that something is wrong but don't raise an exception. Such failures will come back and bite you in the form of corrupted data, broken processes, or even lost revenue for the business. Just let the system break!

- The developer should take full ownership of the change. Only then can they let the system break, keep a keen eye on the health of the system, and fix issues as soon as they are identified.

- Bugs are inevitable. Instead of building a system without bugs, give room for the breaking changes and build the tools to raise the alarms loud enough so that the firefighting team comes and fixes the issues. Fail fast, fix fast!

- Believe in failing fast, fixing fast

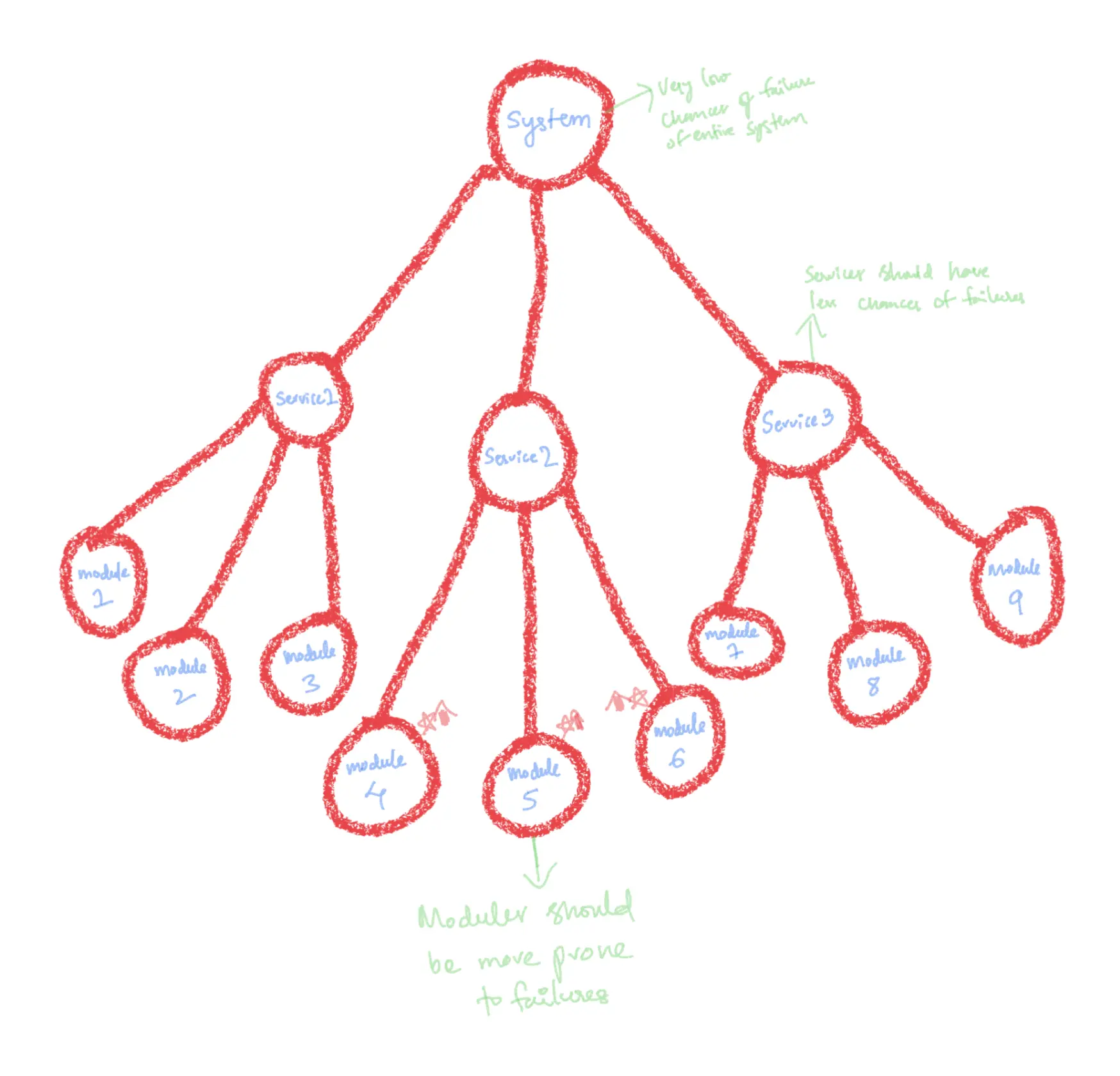

- Breaking changes sure are scary, but if the system collapses entirely even for minor bugs, it is a signal that there is a bigger problem in the design of the system.

- Make sure you have a logging system that raises appropriate alarms and informs relevant teams when there is a bug. Expecting the system to fail without these tools is suicidal.
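The point about silent failures in the list above can be sketched in a few lines. Both functions and the `payments` logger name are hypothetical; the first one logs and carries on with a made-up value, the second one logs for context and then re-raises so the failure stays visible.

```python
import logging

logger = logging.getLogger("payments")  # hypothetical logger name


def parse_amount_silently(raw):
    """Anti-pattern: log the problem, then continue with a made-up value."""
    try:
        return float(raw)
    except ValueError:
        logger.error("invalid amount: %r", raw)
        return 0.0  # the caller now stores a wrong amount


def parse_amount(raw):
    """Log for context, then re-raise so the failure is not swallowed."""
    try:
        return float(raw)
    except ValueError:
        # logger.exception records the message together with the traceback.
        logger.exception("invalid amount: %r", raw)
        raise
```

The silent variant turns bad input into a plausible-looking zero that may end up in the data, whereas the second variant keeps the log line and still lets the error reach whatever raises the alarm.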

I have written more about how to use exceptions in a separate post. You can read it here

Summary

The systems we build need to run in a very volatile environment. The assumptions we make while building the application will go for a toss once we put it in a production environment. Our system need not, and cannot, work in all circumstances. It is fine for it to fail in a condition or environment it was not built for. It is far worse if it keeps running and does not give the expected result. Embrace the failures and build tools to identify and fix them as soon as possible. Next time, recite "Let it break and fix it quickly!" Cheers!